Three Criminal Responsibility Paradigms for Artificial Intelligence Agency

Can a harmful artificial intelligence (AI) agent be held directly criminally responsible? This project seeks a new way to address this recurring question. Instead of trying to ascertain whether AI agents could ever be our moral duplicates, responsive as we are to blame and punishment, it asks whether AI agents can be deterred – like the mythical “homo economicus” of the economic theory of crime – or morally educated towards harm-reduction – like our children.

The core idea behind the three paradigms of criminal responsibility is that the conceptual dualism in criminal law between “persons” and “things” cannot grasp AI agency, which is situated “in between” these two categories: autonomous AI agents are neither “full persons” nor “mere things.” The insight of the research is that their status is more fittingly captured by H.L.A. Hart’s interpretation of the utilitarian definition of the criminal law’s person. In Hart’s critical appraisal, strict utilitarians viewed persons as mere “alterable, predictable, curable or manipulable things.” This is perhaps not a good way to talk about human beings, but it is an apt description of the new form of non-human agency that AI represents. Building on this insight and on the premise that the normative view of the criminal law’s person derives from the function of criminal law itself, the research will explore three criminal responsibility paradigms for AI agency, namely, the blame paradigm, the economic paradigm, and the rehabilitative paradigm.

| Expected outcome: | Dissertation |
|---|---|
| Project language: | English |
| Timeframe: | approx. 09/2022 – 12/2025 |

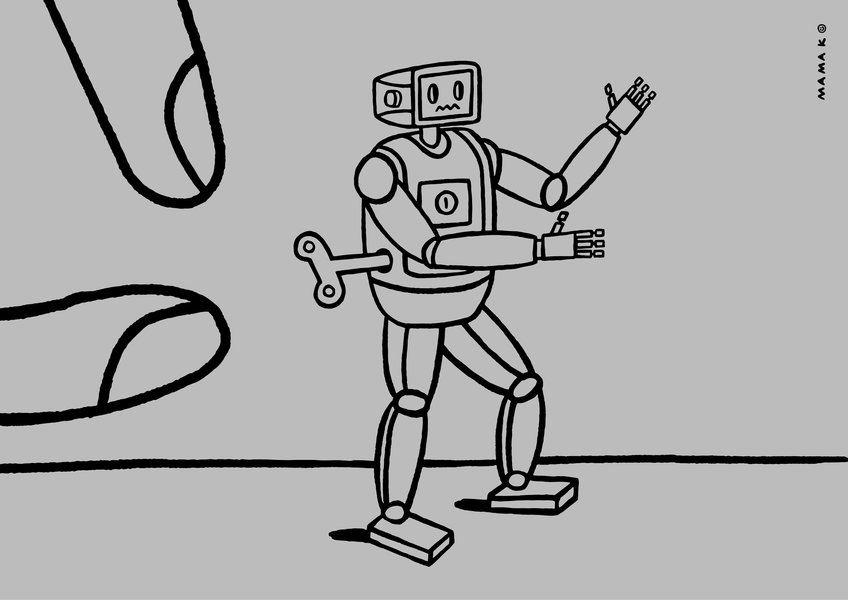

Graph: © Mamak