Due Diligence Requirements for Automated Anti-Money Laundering Systems

Exploring Ways to Limit Criminal Liability When Using Artificial Intelligence

With the advent of artificial intelligence (AI) systems, new players are entering the corporate arena. While they offer many advantages, they also pose new risks. Using the example of AI systems for anti-money laundering, this doctoral project will analyze the potential criminal liability of managers and companies who use AI systems and – based on this analysis – develop due diligence requirements for the use of AI systems in companies.

AI systems can be expected to perform various tasks in companies better and more efficiently than ever before. This is particularly true in the area of anti-money laundering: compared to the rule-based systems in use today, AI systems promise both significant improvements in the detection of suspicious transactions and a considerable increase in efficiency. However, AI systems also entail new risks. First, they are usually a black box for users; that is, their automated decision-making is neither predictable ex ante nor explainable ex post. Second, AI systems may make mistakes. Mistakes that are criminally relevant are particularly serious: if, for example, an AI system fails to detect a suspicious transaction, a bank or securities firm can be prosecuted.

The aim of this project is, first, to analyze the potential criminal liability under Swiss law of managers and companies that use AI systems. In a second step, the project will define limits to criminal liability by elaborating due diligence requirements for the use of AI systems. In addition to building on the aforementioned legal analysis, this step will incorporate findings from an in-depth examination of the approach taken in Luxembourg to the deployment of AI systems in the fight against money laundering.

By elaborating due diligence requirements for the use of AI systems in companies, the project aims to close a gap in criminal liability. It will also contribute to the resolution of fundamental legal issues related to socially acceptable risks and to the clarification of an important practical question: when and under what conditions AI systems should be deployed, both in corporate environments in general and in the banking sector in particular.

| Expected outcome: | Dissertation |

|---|---|

| Project language: | German |

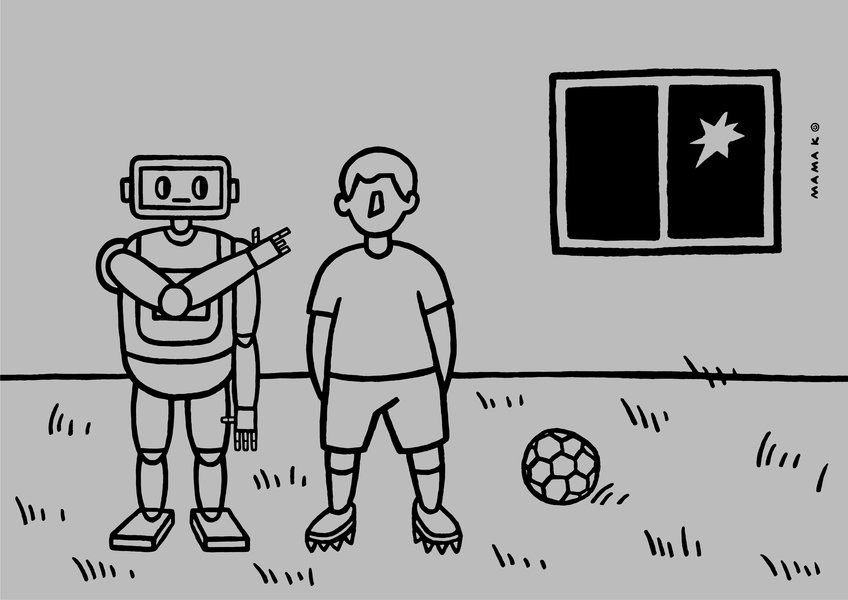

| Graph: | © Mamak |